From phishing to wire transfer fraud, social engineering is rampant.

User manipulation is responsible for a large number of cyberattacks, and the situation is not improving.

At the same time, the rapid development of large language models (LLMs) and generative AI raises questions about the new possibilities they open up for cybercriminals.

AI can be used at various levels to make these attacks more devastating. It allows attackers to make their attacks harder to detect, more manipulative, and easier to scale.

This is what we will explore in this article.

The Mechanisms of Social Engineering

Social engineering uses psychological manipulation levers to induce potentially dangerous, exploitable behaviors that would not otherwise occur.

To influence their victims, attackers exploit levers such as authority, fear, curiosity, or urgency.

These levers aim to bypass the rational reasoning and behavior that victims are expected to apply, and may have learned in awareness programs, in favor of instinctive, emotional reactions that are far more dangerous.

For example, an email claiming to share an important document about upcoming salary increases will often lead to the attachment being opened. This action is driven by curiosity and the lure of potential gain, and is too rarely preceded by a careful check of the sender or of how plausible such a share really is.

Detecting these attacks comes down to sharpening one's critical thinking and suspicion reflexes, which engage deliberate reasoning and lead to a deeper analysis of communications and the clues they may contain.

Unfortunately, the development of generative AI and increasingly capable LLMs complicates this task.

AI Increases the Difficulty of Detecting Attacks

Recent advances in artificial intelligence and the democratization of technologies such as LLMs and generative AI bring a wealth of opportunities, and dangers, for cybersecurity.

Conversational Phishing

A concept that is dear to us at Arsen is conversational phishing.

A "classic" phishing attempt involves an email containing a link or an attachment with an active payload, used to gain initial access.

These phishing attempts remain effective today, but protection systems are improving: passwordless authentication, sandboxes, intelligent filters, etc.

On the other hand, anything that relies purely on conversation, that is, on seemingly harmless exchanges with no malicious link or payload, is much harder to detect.

And this is where LLMs excel.

Take ChatGPT: it can respond in many languages and sustain a conversation without any obvious sign that you are talking to a computer program.

If this conversation used social engineering and aimed to extract sensitive information or manipulate you into performing potentially compromising actions, programmatically detecting the attack would be extremely difficult.

ChatGPT and OpenAI's API do have safeguards meant to prevent them from being used as dangerous manipulators, but Pandora's box is nonetheless open.

Offensive LLMs like WormGPT or FraudGPT are emerging, and the cost of building specialized LLMs on top of open-source base models keeps falling.

We are thus on the verge of large-scale scams that conventional protections will struggle to detect.

Context Creation: False Identities, Pretext Strengthening…

Every social engineering attack begins with the creation of a pretext to justify the attacker's request.

The attacker must make the request plausible to the victim and therefore build a favorable context: a false identity and a situation that justifies the request, whether for a wire transfer, confidential information, or some other action by the victim.

However, building this context can be tedious, and some attempts fall apart as soon as someone thoroughly researches the attacker's purported identity.

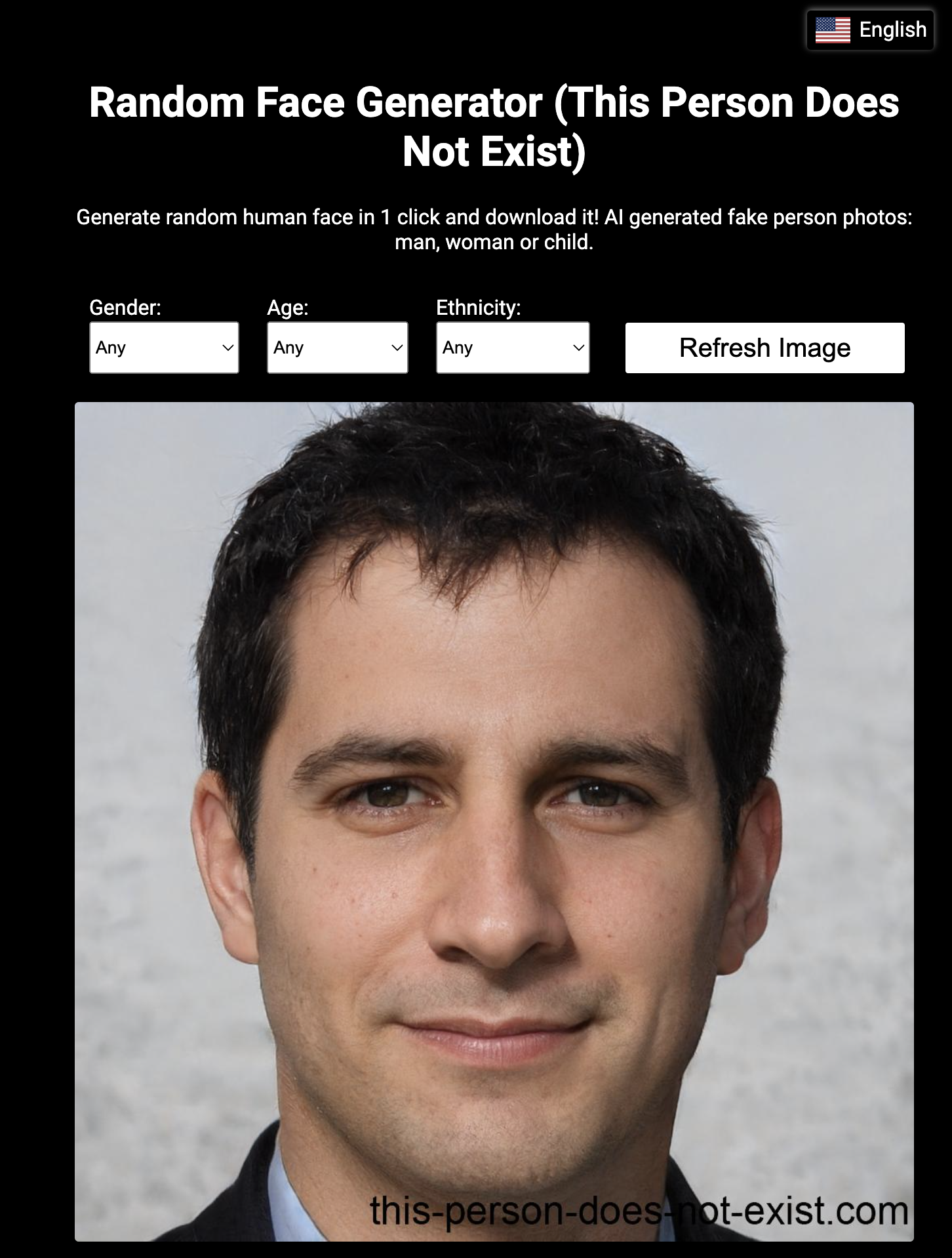

Generative AI makes it possible to build a far more detailed and realistic context.

For example, to create a fake social network profile, attackers have long reused existing profiles and stolen photos of real people.

A reverse image search could then expose the attempt, allowing appropriate measures to be taken.
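Reverse image search engines rely on much more robust techniques, but the core idea of catching a reused photo can be illustrated with a perceptual hash. The sketch below is a toy under stated assumptions, not how any particular engine works: it computes an average hash (aHash) over a grayscale image given as rows of 0-255 integers and compares fingerprints by Hamming distance. The helper `looks_like_reused_photo` and its threshold of 5 bits are hypothetical choices made for illustration.

```python
"""Toy near-duplicate detection with an average hash (aHash).

Assumption: images are grayscale pixel grids (lists of rows of 0-255 ints)
at least `size` pixels in each dimension. Real reverse image search
engines use far more robust features than this.
"""


def average_hash(pixels: list[list[int]], size: int = 8) -> int:
    """Return a size*size-bit fingerprint of a grayscale image."""
    height, width = len(pixels), len(pixels[0])
    if height < size or width < size:
        raise ValueError("image must be at least size x size pixels")
    cells = []
    for by in range(size):
        for bx in range(size):
            # Average the block of pixels that maps onto this grid cell.
            y0, y1 = by * height // size, (by + 1) * height // size
            x0, x1 = bx * width // size, (bx + 1) * width // size
            block = [pixels[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            cells.append(sum(block) / len(block))
    mean = sum(cells) / len(cells)
    fingerprint = 0
    for value in cells:
        # One bit per cell: 1 if the cell is brighter than the image mean.
        fingerprint = (fingerprint << 1) | int(value > mean)
    return fingerprint


def hamming_distance(a: int, b: int) -> int:
    """Number of differing bits between two fingerprints."""
    return bin(a ^ b).count("1")


def looks_like_reused_photo(candidate, known, threshold: int = 5) -> bool:
    """Hypothetical helper: flag near-duplicates of a known photo.

    The 5-bit threshold (out of 64) is an arbitrary illustrative value.
    """
    return hamming_distance(average_hash(candidate), average_hash(known)) <= threshold


if __name__ == "__main__":
    # A 32x32 horizontal gradient stands in for a "real" profile photo.
    original = [[x * 7 for x in range(32)] for _ in range(32)]
    # A lightly brightened copy, as an attacker might re-upload it.
    edited = [[min(255, v + 10) for v in row] for row in original]
    # An unrelated image (here, the inverted gradient).
    unrelated = [[255 - v for v in row] for row in original]
    print(looks_like_reused_photo(edited, original))     # True
    print(looks_like_reused_photo(unrelated, original))  # False
```

A lightly edited copy of a stolen photo keeps essentially the same fingerprint, which is why reused pictures tend to get caught. A freshly generated face, by contrast, has no earlier copy online to match against.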

With Generative Adversarial Networks (GANs), it is now easy and cheap to generate fake profile pictures.

Sites like ThisPersonDoesNotExist generate a face in a few seconds. Because the image is new, a reverse image search finds no match, so it can be used to build credible fake profiles that escape this check.

It's also possible to generate entire websites, along with content, to lend more credibility to a fake company profile.

A sock puppet is a fake digital identity used for online influence and manipulation operations.

Running a whole network of sock puppets is now much cheaper as well.

AI thus makes social engineering attacks much harder to detect, but it doesn’t stop there.

Beyond the context and vector used, it can also increase the effectiveness and success rate of these attempts.

AI Enhances Manipulative Efficacy

Not only does AI make attacks more elusive, but it can also significantly boost their effectiveness.

Profiling and Pretexting through AI

As mentioned earlier, the first phase of a social engineering attack involves creating a pretext.

It’s about crafting a context that legitimizes and lends credibility to the attacker’s requests.

This research is preparatory work that can be time-consuming, which drastically reduces the return on investment of such attacks.

Thanks to AI, and LLMs in particular, it is now possible to generate relevant pretexts at scale.

Just look at the email marketing and copywriting tools that write tailored cold emails from a LinkedIn profile.

These pretexts are not only relevant but also much cheaper to deploy at scale, enabling attackers to multiply their impact by operating at a far larger scale.

Deep Fakes and Synthetic Media

Another aspect of generative AI involves creating synthetic media.

We briefly discussed fake profile pictures earlier, but generative AI doesn’t stop there.

It is now easy and inexpensive to create videos or voice messages in which one's face and voice are altered to imitate someone else.

Voice cloning, for example, lets an attacker impersonate someone and leverage their authority to make illegitimate requests.

Imagine receiving a call from your boss with an urgent request.

Many verification procedures rely on a phone call, on the assumption that one would recognize a fraud attempt from the caller's voice or intonation.

This check is now much less reliable.

With video deepfakes, it is also possible to swap one's face in real time to impersonate someone on a live call.

The identity of Patrick Hillmann, Chief Communications Officer of Binance, was allegedly used via a deepfake in a scam targeting founders of cryptocurrency projects.

Such operations are still rare and expensive to deploy, but as with any technology, their costs tend to fall over time.

AI Enables Attackers to Scale Up

I'm not telling you anything new; there are numerous different attacks and scam attempts out there.

Some of the most dangerous ones are rarer because they require skilled labor and a significant time investment.

Attacks carried out by phone or voice message, for example, require an operator who is:

- Capable of adapting attack scripts to a conversation, a fluid situation with its share of unforeseen events

- Fluent in the victim's language

While the attacker is on the phone with their victim, they are not attacking other potential targets.

By combining building blocks such as LLMs, which can understand and respond in natural language, with speech-to-text and text-to-speech, which convert speech into text and back, these previously limited attacks can be scaled up.

As implementation costs fall, we should expect a sharp increase in these attacks.

Conclusion

AI is a fantastic tool, with major productivity gains on the horizon.

However, these gains apply just as well to legitimate uses as they do to illicit activities.

There is no need to fear it, but we must prepare for this new cybersecurity landscape and the new attacks it will undoubtedly bring.

It is evident that AI is changing the landscape of cyber threats. This article focuses on its application to social engineering, but AI is also used to obfuscate malware, generate novel payloads, and create exploits.

Of course, AI will also help strengthen our defenses. The eternal cat-and-mouse game between attackers and defenders never stops.

As for social engineering, beyond the technological protections that will undoubtedly emerge, themselves relying heavily on these new AI developments, user training can no longer be neglected.

Indeed, the human element remains agile and adaptable, and it is our duty to strengthen ourselves against manipulation attempts.

Despite the impressive evolution of attacks, the manipulation techniques used have relied on the same psychological levers since time immemorial.

And the good news is that we can train and strengthen ourselves against these attacks right now, which is the raison d'être of Arsen.

We offer a solution based on behavioral therapy concepts that builds detection reflexes for the moments when these levers are used to manipulate us.